Scan AI

PNY 3S AI-optimised storage arrays

Today’s AI servers consume and analyse data far faster than many traditional storage solutions can deliver it. The result is underutilised GPUs, dramatically longer training times and lower productivity. PNY, NVIDIA’s global partner, has developed storage solutions from the ground up for AI workloads, optimised for the NVIDIA DGX range of AI appliances. Here at Scan AI we have worked with PNY and NVIDIA to deliver a range of approved POD architectures that pair a PNY 3S-1050 or PNY 3S-2450 array with one or more DGX A100 appliances. These PODs are fully designed, tested and configured to deliver the ultimate in AI training performance at a price point previously unheard of.

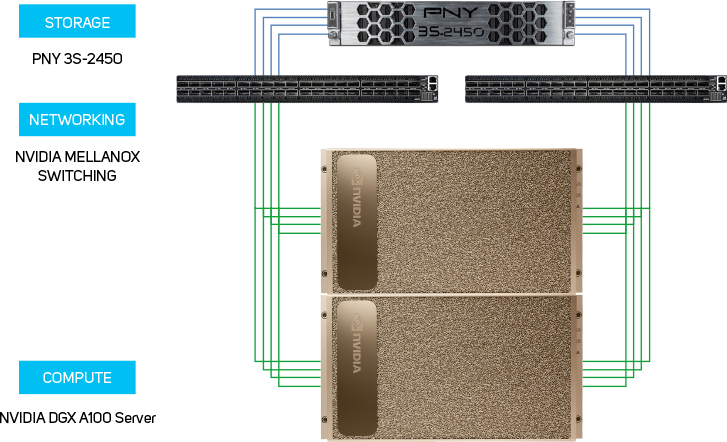

Pod 1

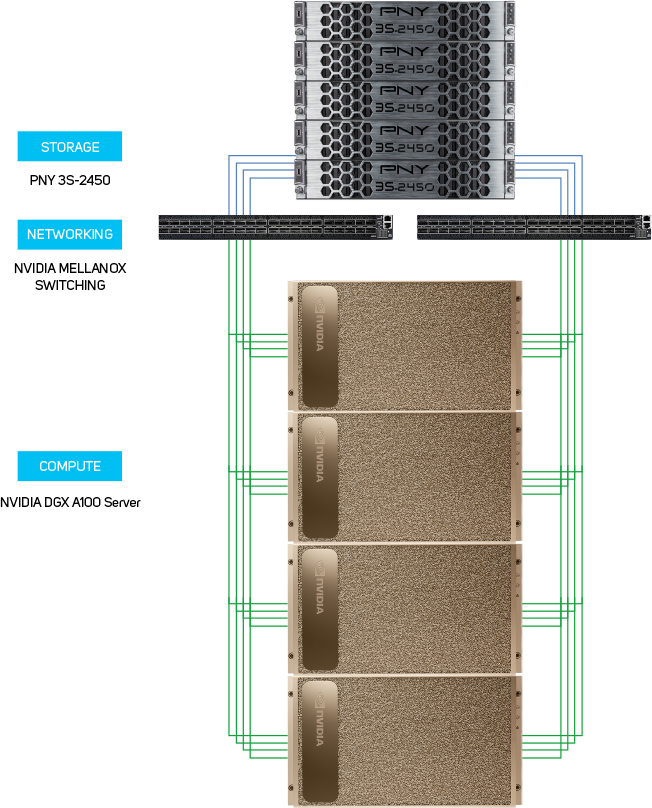

Pod 2

Where multiple DGX systems are used, the storage requires a shareable filesystem, which has traditionally been both complex and expensive to implement at high performance. PNY’s appliances provide a simple yet blisteringly fast shareable solution with no need for multiple storage nodes or controllers: everything needed is contained and automated within a single appliance. Scaling is just as straightforward as your demands increase. The 1U 3S-1050 delivers up to 150TB and the 2U 3S-2450 can house a massive 345TB, so you can start small and grow as needed. Should a project require even larger capacities, additional expansion units are available.
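One rough way to compare the two arrays is to divide each maximum capacity quoted above by its chassis height. The sketch below is illustrative only. It uses just the 150TB/1U and 345TB/2U figures from this page, and the dictionary and function names are hypothetical.

```python
# Maximum capacities (TB) and chassis heights (rack units) quoted on this page.
# The names used here are hypothetical, for illustration only.
ARRAYS = {
    "3S-1050": {"max_tb": 150, "rack_units": 1},
    "3S-2450": {"max_tb": 345, "rack_units": 2},
}


def tb_per_rack_unit(model: str) -> float:
    """Return the quoted maximum capacity divided by the chassis height in U."""
    spec = ARRAYS[model]
    return spec["max_tb"] / spec["rack_units"]


if __name__ == "__main__":
    for model in ARRAYS:
        print(f"{model}: {tb_per_rack_unit(model):.1f} TB per U")
```

On these figures the 2U 3S-2450 is slightly denser, at 172.5TB per rack unit against 150TB for the 1U 3S-1050.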

Maximum GPU Utilisation

Scan AI PODs come pre-configured with Run:ai Atlas software, which decouples data science workloads from the underlying GPU hardware. Run:ai pools GPU resources and applies an advanced scheduling mechanism to data science workflows. This greatly increases the share of available GPUs that are actually kept busy, so each team has far more compute at its disposal. Data scientists can then run more experiments, reach results faster and ultimately meet the business goals of their AI initiatives.
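To show why pooling raises utilisation, here is a deliberately simplified model. It is not Run:ai’s scheduler or API. Under static allocation, each team may use only its own fixed GPU quota. In the pooled case, any team with queued work may use any idle GPU. Utilisation is measured at a single moment, and the quotas and demand figures are hypothetical.

```python
from typing import List


def static_utilisation(quotas: List[int], demands: List[int]) -> float:
    """Fraction of GPUs busy when each team can only use its own fixed quota."""
    total_gpus = sum(quotas)
    busy = sum(min(quota, demand) for quota, demand in zip(quotas, demands))
    return busy / total_gpus


def pooled_utilisation(quotas: List[int], demands: List[int]) -> float:
    """Fraction of GPUs busy when all GPUs form one shared pool."""
    total_gpus = sum(quotas)
    busy = min(total_gpus, sum(demands))
    return busy / total_gpus


if __name__ == "__main__":
    # Hypothetical snapshot: four teams with 8 GPUs each (32 GPUs in total).
    quotas = [8, 8, 8, 8]
    demands = [2, 14, 0, 12]  # GPUs each team could use right now
    print(f"static: {static_utilisation(quotas, demands):.2%}")
    print(f"pooled: {pooled_utilisation(quotas, demands):.2%}")
```

In this snapshot, static quotas keep 18 of 32 GPUs busy (56.25%). Two teams sit on idle capacity while two others are held back. Pooling lets the queued work use those idle GPUs, raising utilisation to 28 of 32 (87.5%).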

Centralise AI

Pool GPU compute resources so IT gains visibility and control over resource prioritisation and allocation

Maximise Utilisation

Automatic and dynamic provisioning of GPUs breaks the limits of static allocation to get the most out of existing resources

Deploy to Production

An end-to-end solution for the entire AI lifecycle, from build to train to inference, all delivered in a single platform

Your Choice of Scan AI PODs

| | Pod 1 | Pod 2 |
|---|---|---|
| Performance | \*\*\* | \*\*\*\* |
| Compute | 2x NVIDIA DGX A100 320GB | 4x NVIDIA DGX A100 320GB |
| Storage | PNY 3S-2450, 105TB usable (expandable up to 345TB) | PNY 3S-2450, 210TB usable (expandable up to 345TB) |
| Networking | 2x NVIDIA Mellanox MSN3700-VS2FC | 2x NVIDIA Mellanox MSN3700-VS2FC |
| Rack Space | Full Rack | Full Rack |
| Run:ai | ✓ | ✓ |
| Installation | ✓ | ✓ |
| 3-year Support | ✓ | ✓ |
| Cost | £££ | ££££ |
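The sketch below derives a few like-for-like figures for the two PODs from the table: GPU count, total GPU memory, usable storage per GPU and remaining expansion headroom. It assumes the standard DGX A100 320GB configuration of eight 40GB A100 GPUs per system. It also treats the 345TB expansion limit as usable capacity. The `Pod` class and constant names are hypothetical.

```python
from dataclasses import dataclass

GPUS_PER_DGX_A100 = 8    # each DGX A100 system contains eight A100 GPUs
GPU_MEMORY_GB = 40       # the 320GB DGX A100 model uses 8 x 40GB A100 GPUs
ARRAY_MAX_TB = 345       # assumption: 345TB expansion limit treated as usable


@dataclass
class Pod:
    name: str
    dgx_systems: int
    usable_tb: float

    @property
    def gpus(self) -> int:
        return self.dgx_systems * GPUS_PER_DGX_A100

    @property
    def gpu_memory_gb(self) -> int:
        return self.gpus * GPU_MEMORY_GB

    @property
    def tb_per_gpu(self) -> float:
        return self.usable_tb / self.gpus

    @property
    def expansion_headroom_tb(self) -> float:
        return ARRAY_MAX_TB - self.usable_tb


PODS = [Pod("Pod 1", 2, 105), Pod("Pod 2", 4, 210)]

if __name__ == "__main__":
    for pod in PODS:
        print(
            f"{pod.name}: {pod.gpus} GPUs, {pod.gpu_memory_gb}GB GPU memory, "
            f"{pod.tb_per_gpu:.2f}TB per GPU, {pod.expansion_headroom_tb:.0f}TB headroom"
        )
```

On these assumptions both PODs offer the same usable storage per GPU (about 6.6TB). Pod 1 leaves more room to grow within a single 3S-2450: 240TB of headroom against 135TB for Pod 2.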