The Oxford Robotics Institute (ORI) is built from collaborating and integrated groups of researchers, engineers and students, all driven to change what robots can do for us. Their current interests are diverse: flying to grasping, inspection to running, haptics to driving, and exploring to planning. This spectrum of interests leads to research across a broad span of technical topics, including machine learning and AI, computer vision, fabrication, multispectral sensing, perception and systems engineering.

Leveraging Translational Invariance of the Fourier Transform for Efficient and Accurate Radar Odometry

Project Background

In recent years, Radar Odometry (RO) has emerged as a valuable alternative to lidar- and vision-based approaches, owing to radar’s robustness to adverse conditions and its long sensing horizon. However, noise artefacts inherent in the sensor imaging process make the task challenging. An early study [1] first demonstrated the potential of radar for this task and has since sparked significant interest in RO. While sparse point-based RO methods [1-7] have shown significant promise, a more recently established dense approach [8], Masking by Moving (MbyM), has offered new opportunities for this problem setting. MbyM masks radar observations using a deep neural network (DNN) before adopting a traditional brute-force scan matching procedure, an exhaustive correlative search across discretised pose candidates, and in doing so learns a feature embedding explicitly optimised for RO. As robust and interpretable as a traditional scan matching procedure, MbyM was able to significantly outperform the previous state of the art [1]. However, the dense search creates a significant computational bottleneck: as ORI demonstrates, MbyM in its original incarnation is unable to run in real time on a laptop at all but the smallest resolutions, and cannot run in real time at all on an embedded device. The requirement for a high-end GPU for real-time performance is a significant hindrance in deployment scenarios where the cost or power requirements of such hardware are prohibitive.

Project Approach

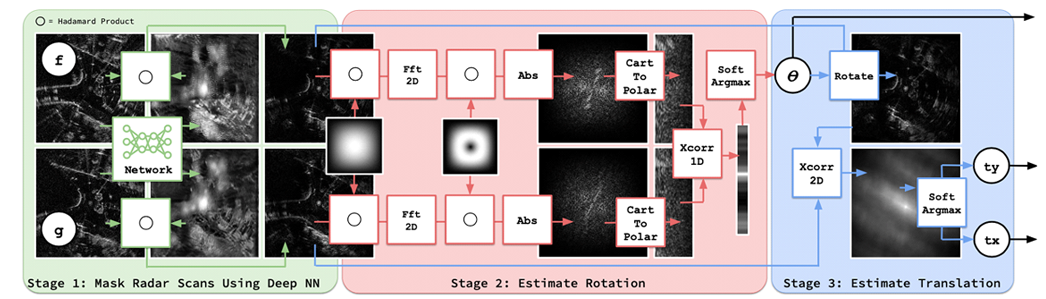

In this case study, ORI proposed a number of modifications to the original MbyM approach which result in significantly faster run times, enabling real-time performance at higher resolutions on both CPUs and embedded devices. In particular, instead of performing a brute-force search over all possible combinations of translation and angle, the new approach exploits properties of the Fourier Transform to search for the angle between the two scans independently of translation. By adopting this decoupled approach, ORI succeeded in significantly reducing the computational power required. The method, coined Fast Masking by Moving (f-MbyM), retains end-to-end differentiability and so can still use a convolutional neural network (CNN) to mask radar scans, learning a radar scan representation explicitly optimised for RO.
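
The Fourier property being exploited can be checked numerically. The sketch below is an illustration rather than ORI's implementation: it assumes NumPy and uses a random array as a stand-in for a radar scan. It shows that circularly shifting an image leaves the magnitude of its 2D Fourier transform unchanged, because a translation only alters the phase of each coefficient. A rotation of the scan, by contrast, rotates the magnitude spectrum by the same angle, which is what makes it possible to search for the angle on its own.

```python
import numpy as np

def fourier_magnitude(scan: np.ndarray) -> np.ndarray:
    """Return the magnitude of the 2D discrete Fourier transform of a scan."""
    return np.abs(np.fft.fft2(scan))

# Synthetic stand-in for a Cartesian radar scan (assumption: not real radar data).
rng = np.random.default_rng(0)
scan = rng.random((64, 64))

# Circularly shift the scan by 5 rows and -9 columns.
shifted = np.roll(scan, shift=(5, -9), axis=(0, 1))

# The shift only changes the phase of each Fourier coefficient,
# so the two magnitude spectra agree to numerical precision.
max_difference = np.max(np.abs(fourier_magnitude(scan) - fourier_magnitude(shifted)))
print(f"largest magnitude difference: {max_difference:.2e}")
```

The invariance is exact only for circular shifts. For real scans, where content enters and leaves the field of view, it holds approximately.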

Figure 1: Given radar scans f and g, the system outputs the relative pose [θ,tx,ty] between them in three phases: (1) each radar scan is masked using a deep neural network; (2) the rotation θ is determined by maximising the correlation between the magnitudes of their Fourier transforms in polar co-ordinates; (3) the translation [tx,ty] is determined by maximising the correlation between f and g rotated by the now known angle θ. Using this approach it is possible to determine θ independently of [tx,ty], allowing real-time performance on a CPU or embedded device. Crucially, this entire procedure is end-to-end differentiable, allowing the network to be explicitly optimised for radar pose estimation.
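
Phase (3) can be illustrated with a short sketch. It is a simplified stand-in, not the f-MbyM code: it assumes NumPy, skips the learnt mask, assumes the rotation has already been removed, and treats the translation as a circular shift by a whole number of pixels. The function name `estimate_translation` is hypothetical. The correlation between the two scans is computed in the frequency domain and the location of its peak gives the translation.

```python
import numpy as np

def estimate_translation(f: np.ndarray, g: np.ndarray) -> tuple[int, int]:
    """Estimate the circular shift (rows, cols) that maps scan f onto scan g."""
    # Cross-correlation theorem: correlation in space is a product in frequency.
    cross_power = np.fft.fft2(g) * np.conj(np.fft.fft2(f))
    correlation = np.fft.ifft2(cross_power).real
    # The correlation peak sits at the shift that best aligns the two scans.
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Indices past the midpoint wrap around to negative shifts.
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, correlation.shape))

# Synthetic example: g is f shifted by 5 rows and -9 columns.
rng = np.random.default_rng(1)
f = rng.random((64, 64))
g = np.roll(f, shift=(5, -9), axis=(0, 1))
print(estimate_translation(f, g))
```

The hard `argmax` used here is not differentiable, so this sketch covers only the search itself and not the end-to-end training described in the caption.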

For this project, ORI used both an Intel Core i7 laptop with integrated graphics and an embedded device with an NVIDIA Jetson Nano GPU. For the latter, ORI used the event profiling provided by PyTorch / CUDA, while for the laptop a standard Python library was employed.
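
As a rough illustration of CPU-side timing, the sketch below measures the median wall-clock time of a function call. The source does not say which Python library was used, so the choice of `time.perf_counter` is an assumption, and `time_call` and `dummy_odometry_step` are hypothetical names standing in for a real inference step.

```python
import statistics
import time

def time_call(fn, *args, repeats: int = 20, warmup: int = 3) -> float:
    """Return the median wall-clock time of fn(*args) in milliseconds."""
    for _ in range(warmup):
        fn(*args)  # warm-up runs are discarded
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)

def dummy_odometry_step(n: int) -> int:
    """Hypothetical stand-in for one odometry inference step."""
    return sum(i * i for i in range(n))

elapsed_ms = time_call(dummy_odometry_step, 10_000)
print(f"median step time: {elapsed_ms:.3f} ms")
```

Host-side timers like this are unreliable for GPU work because CUDA kernels launch asynchronously, which is the usual reason for timing on the device with events instead.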

Project Results

To assess odometry accuracy, ORI followed the KITTI odometry benchmark [9]: for each 100m segment of trajectories up to 800m long, the average residual translational and angular error is calculated for every test set sequence and normalised by the distance travelled. The performance across each segment and over all trajectories is then averaged to give the primary measure of success.
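
The segment-based metric can be sketched as follows. This is a simplified planar version written for illustration, not the official KITTI evaluation code: poses are (x, y, heading) tuples to match the [θ,tx,ty] output, and the start-frame spacing `step=10` is an assumption. Function names are hypothetical.

```python
import math

Pose = tuple[float, float, float]  # (x in metres, y in metres, heading in radians)

def relative(a: Pose, b: Pose) -> Pose:
    """Pose of b expressed in the frame of a."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    c, s = math.cos(a[2]), math.sin(a[2])
    dtheta = math.atan2(math.sin(b[2] - a[2]), math.cos(b[2] - a[2]))
    return (c * dx + s * dy, -s * dx + c * dy, dtheta)

def segment_errors(gt, est, lengths=range(100, 801, 100), step=10):
    """Average translational (fraction) and angular (rad/m) error over segments."""
    # Cumulative distance travelled along the ground-truth trajectory.
    dist = [0.0]
    for p, q in zip(gt, gt[1:]):
        dist.append(dist[-1] + math.hypot(q[0] - p[0], q[1] - p[1]))
    t_errs, r_errs = [], []
    for start in range(0, len(gt), step):  # assumed spacing of start frames
        for length in lengths:
            # First frame at least `length` metres beyond the start frame.
            end = next((i for i in range(start, len(gt))
                        if dist[i] - dist[start] >= length), None)
            if end is None:
                continue
            # Residual between estimated and ground-truth motion over the segment.
            err = relative(relative(est[start], est[end]),
                           relative(gt[start], gt[end]))
            t_errs.append(math.hypot(err[0], err[1]) / length)
            r_errs.append(abs(err[2]) / length)
    if not t_errs:
        raise ValueError("trajectory is shorter than the shortest segment")
    return sum(t_errs) / len(t_errs), sum(r_errs) / len(r_errs)

# Synthetic example: a straight 1 km drive whose estimate overshoots by 2%.
gt = [(float(i), 0.0, 0.0) for i in range(1000)]
est = [(1.02 * i, 0.0, 0.0) for i in range(1000)]
t_err, r_err = segment_errors(gt, est)
print(f"translation: {100 * t_err:.2f} %, "
      f"rotation: {math.degrees(r_err) * 1000:.2f} deg/km")
```

Normalising each segment's error by its length is what allows drift to be reported as a percentage for translation and in degrees per kilometre for rotation.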

Comparing the run-time efficiency of f-MbyM to MbyM, the benefits of adopting a decoupled approach become clear: in a like-for-like comparison at each resolution, ORI achieved speedups of 372% to 800% on the laptop Core i7 CPU and 424% to 470% on the embedded Jetson GPU. Because f-MbyM runs faster, it was able to use a model at a higher resolution while still maintaining real-time operation. ORI found that increasing the resolution from 127 to 255 results in a significant reduction in end-point error, whereas increasing it from 255 to 511 brings only a marginal further reduction. As f-MbyM at a resolution of 255 runs significantly faster than at 511, the former is considered to be the best performing model. This is in stark contrast to regular MbyM, which is unable to achieve real-time performance at any of the tested resolutions.

Figure 2: By adopting a decoupled search for angle and translation, ORI was able to train significantly faster and with much less memory, as shown above. ORI averaged the time for each training step (excluding data loading) across an epoch for MbyM and f-MbyM running on inputs at a resolution of 255. This process was repeated, doubling the batch size each time, until a 12GB NVIDIA TITAN X GPU ran out of memory. Whilst MbyM is only able to fit a batch size of 4 into memory, f-MbyM manages 64. Furthermore, ORI found that a training step for f-MbyM is roughly 4-7 times faster than for MbyM (a like-for-like comparison at each batch size).

Conclusions

In summary, f-MbyM trains faster and with less memory, and its decoupled search allows it to achieve significant run-time performance improvements on a CPU (168%) and to run in real time on embedded devices, in stark contrast to MbyM. Throughout, the ORI approach remains accurate and competitive with the best radar odometry variants available in the literature, achieving an end-point drift of 2.01% in translation and 6.3 deg/km on the Oxford Radar RobotCar Dataset. For further information, the entire white paper can be downloaded here.

References

[1] S. H. Cen and P. Newman, “Precise ego-motion estimation with millimeter-wave radar under diverse and challenging conditions,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 6045–6052, IEEE, 2018.

[2] S. H. Cen and P. Newman, “Radar-only ego-motion estimation in difficult settings via graph matching,” in 2019 International Conference on Robotics and Automation (ICRA), pp. 298–304, IEEE, 2019.

[3] R. Aldera, D. De Martini, M. Gadd, and P. Newman, “Fast radar motion estimation with a learnt focus of attention using weak supervision,” in 2019 International Conference on Robotics and Automation (ICRA), pp. 1190–1196, IEEE, 2019.

[4] K. Burnett, A. P. Schoellig, and T. D. Barfoot, “Do we need to compensate for motion distortion and doppler effects in spinning radar navigation?,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 771–778, 2021.

[5] K. Burnett, D. J. Yoon, A. P. Schoellig, and T. D. Barfoot, “Radar odometry combining probabilistic estimation and unsupervised feature learning,” in Robotics: Science and Systems, 2021.

[6] D. Barnes and I. Posner, “Under the radar: Learning to predict robust keypoints for odometry estimation and metric localisation in radar,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 9484–9490, IEEE, 2020.

[7] D. Adolfsson, M. Magnusson, A. Alhashimi, A. J. Lilienthal, and H. Andreasson, “Cfear radarodometry - conservative filtering for efficient and accurate radar odometry,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5462–5469, IEEE, 2021.

[8] D. Barnes, R. Weston, and I. Posner, “Masking by moving: Learning distraction-free radar odometry from pose information,” arXiv preprint arXiv:1909.03752, 2019.

[9] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3354–3361, IEEE, 2012.

The Scan Partnership

Scan has been supporting ORI robotics research as an industrial member since 2020. Scan provides a cluster of NVIDIA DGX and EGX servers and AI-optimised PEAK:AIO NVMe software-defined storage to further robotic knowledge and accelerate development. This cluster is overlaid with Run:ai cluster management software, which virtualises the GPU pool across the compute nodes to maximise utilisation and provides a mechanism for scheduling and allocating ORI workflows across the combined GPU resource. Access to this infrastructure is delivered via the Scan Cloud platform, hosted in a secure UK datacentre.

Project Wins

Run-time performance improvements using faster training with less memory

Results comparable with the best radar odometry variants available in the literature

Time and cost savings generated due to access to GPU-accelerated cluster

Rob Weston

DPhil student, Applied AI Lab (A2I), ORI

"Being able to run multiple experiments in parallel allows for rapid prototyping, facilitating the rapid testing of new ideas and a thorough examination of the solution space. In my own research the Scan cluster has been essential for this."

Professor Ingmar Posner

Head of the Applied AI Group, ORI

"We are delighted to have Scan as part of our set of partners and collaborators who are equally passionate about advancing the real-world impact of robotics research. Integral involvement of our technology and deployment partners will ensure that our work stays focused on real and substantive impact in domains of significant value to industry and the public domain."

Speak to an expert

You’ve seen how Scan continues to help the Oxford Robotics Institute further its research into the development of truly useful autonomous machines. Contact our expert AI team to discuss your project requirements.

Phone: 01204 474210

Email: [email protected]