Server Buyers Guide

Scan has a large range of servers available, built to your requirements by 3XS Systems. While our intuitive configurators give you ultimate flexibility to choose the components and specification you require, are you always aware how any given choice could impact the overall performance of your server? Understanding exactly which components are the best fit for particular workloads may help you select a better server - similarly considering future expansion or flexibility may result in you choosing a different model altogether.

This guide will take you through all the major components of a server build and highlight potential areas to consider while you choose each of them. We’ll also cover accessories and some wider infrastructure points too, as these may all play a part in the performance and suitability of your server. Let’s begin.

What is a Server?

Servers act as the core for any organisation’s network - where all centrally controlled processes are carried out. This can range from storing all files and providing back-up and archive functions, through to delivering website capabilities. They are usually split into one of three main types depending on what the usage of the server will be.

| Tower Servers | Rack Servers | Multi Node Servers |
|---|---|---|
| Servers in this format are usually designed for a small office where there may not be a dedicated rack cabinet or server room. A server like this may also do multiple tasks, so they tend to occupy a larger chassis allowing for greater internal storage space for disk drives. | A rack server is designed to be installed in a 19in rack cabinet and will usually be located alongside other servers and network equipment. The more compact rack chassis design reflects the fact that this type of server will be dedicated to a few jobs or even a single job. Less space is required for storage as this is often external and shared amongst several rack servers. Larger chassis are available to accommodate compute acceleration devices such as GPUs. | Although these are rack mounted chassis too, they differ as the chassis will contain multiple dense servers designed for very specific compute tasks. They are sometimes referred to as clusters and are usually employed for CPU intensive workloads, where each node (distinct server) in the cluster works with the others to provide a greater level of compute power in a denser rack space. |

Servers differ from desktop PC and workstation machines in that they are designed to operate 24 hours a day, seven days a week. This ‘always on’ capability is delivered by having ‘server-grade’ or ‘enterprise-class’ components - internal parts that are designed to constantly be in use, and cope with the constant workload of the server. This higher grade specification of components also delivers better resilience and reliability, so it is never wise to build a server using standard PC parts. It is true that high-end workstations can employ server-grade CPUs, memory and storage drives too, but this is usually when the workstation is critical, so in effect warrants the extra reliability server parts will bring.

All 3XS servers are built using server-grade components from market leading manufacturers, and are fully tested prior to shipment. It is worth mentioning that these are identical components to those used in common server brands such as Cisco, Dell, Fujitsu and HPE, however 3XS offers greater customisation during the configuration process.

Server CPUs

The Central Processing Unit, or CPU, is one of the most critical components to consider when building a new server. It governs the majority of the server's performance when it comes to compute tasks, and the number of cores it has directly determines the degree of multitasking the server can perform. There are two main options to choose from when considering server CPUs - AMD EPYC and Intel Xeon.

AMD EPYC CPUs

The AMD EPYC series of processors set the new standard for the modern datacentre. Featuring leadership architecture, performance, and security, AMD EPYC processors help customers turbocharge application performance, transform datacentre operations and help secure critical data. You can learn about the specific models in our AMD EPYC CPU BUYERS GUIDE .

Intel Xeon CPUs

The Intel Xeon 6 processor family introduces an innovative modular architecture that allows datacentre architects to configure and deploy servers that are purpose-built for your unique needs and workloads across private, public and hybrid clouds. Intel Xeon 6 processors have either Performance-cores (P-cores) for maximum performance or Efficient-cores (E-cores) for maximum efficiency in power- and space-constrained environments. You can learn about the specific models in our INTEL XEON CPU BUYERS GUIDE .

Server Memory

There are a number of key things to consider when choosing server memory modules. The first is how many to choose. Filling the server with low-capacity memory modules leaves no free slots, so to reach maximum capacity in the future you would have to remove the existing modules and buy new ones. Furthermore, a greater number of modules draws more power, and if all the slots are full the memory speed will be capped. A better configuration is a smaller number of larger capacity modules, meaning better performance and room for expansion if required.
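To make the expansion trade-off concrete, the short sketch below compares two ways of reaching the same installed capacity. The 16-slot motherboard and the module sizes are hypothetical figures chosen for illustration, not the specification of any particular 3XS chassis.

```python
from dataclasses import dataclass


@dataclass
class MemoryConfig:
    slots: int      # total DIMM slots on the motherboard (hypothetical)
    modules: int    # number of modules fitted
    module_gb: int  # capacity of each module in GB

    @property
    def installed_gb(self) -> int:
        return self.modules * self.module_gb

    @property
    def free_slots(self) -> int:
        return self.slots - self.modules

    @property
    def max_gb_without_replacing(self) -> int:
        # Capacity reachable by filling every slot with the same module size.
        return self.slots * self.module_gb


many_small = MemoryConfig(slots=16, modules=16, module_gb=16)
few_large = MemoryConfig(slots=16, modules=4, module_gb=64)

for name, cfg in (("16 x 16GB", many_small), ("4 x 64GB", few_large)):
    print(f"{name}: {cfg.installed_gb}GB installed, "
          f"{cfg.free_slots} free slots, "
          f"up to {cfg.max_gb_without_replacing}GB without replacing modules")
```

Both configurations install 256GB, but only the second leaves free slots for later growth.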

The second is the type of memory module, or DIMM (dual inline memory module), to choose.

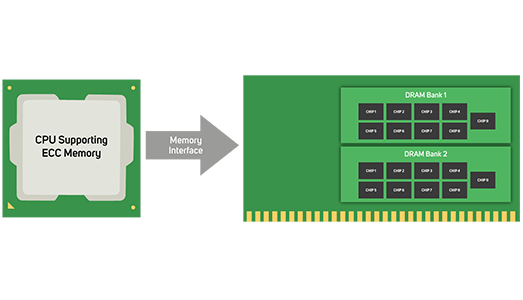

UDIMM

UDIMM or Unbuffered DIMM acts so the address and control signals can directly reach the DRAM chip on the server DIMM without going through a buffer and without any timing adjustment. When data is transmitted from the CPU to the DRAM chips on the DIMMs, the UDIMM needs to ensure that the transmission distance between the CPU and each DRAM chip is equal. UDIMMs are lower in capacity and frequency and have lower latency - however, UDIMM cannot maximise server performance because it only operates in unbuffered mode and thus cannot support the maximum RAM capacity.

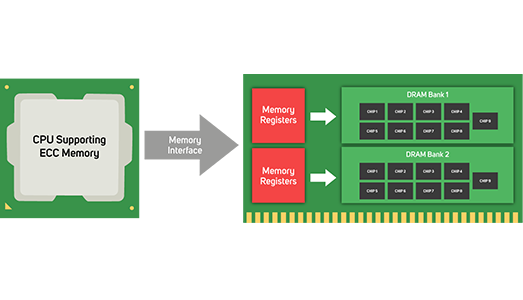

RDIMM

RDIMM or Registered DIMM attaches a register between the CPU and the DRAM chip for data transmission, which reduces the distance of parallel transmission and improves transmission efficiency. RDIMMs are easier to increase in capacity and frequency than UDIMMs due to their high register efficiency. Moreover, RDIMM supports buffered and high-performance registered mode, making it more stable than UDIMM. This gives it the highest-capacity server RAM performance and a wide range of applications in the server RAM market.

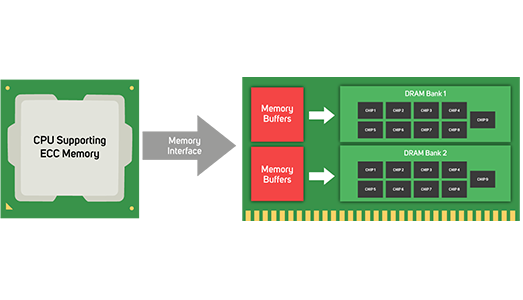

LRDIMM

LRDIMMs, or Load Reduced DIMMs, buffer the signals between the memory controller and the DRAM chips. The buffering reduces the electrical load on the memory bus but has little effect on memory performance. The direct benefit is a further increase in the RAM capacity the server can support, so LRDIMMs not only reduce the load and power consumption of the memory bus but also provide the maximum supported capacity of server RAM.

ECC DIMM

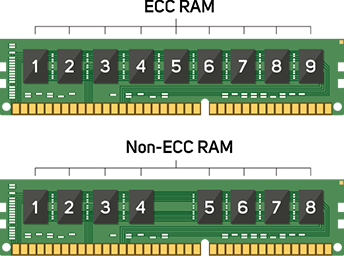

Error Correction Code or ECC memory uses a mathematical process that ensures the data stored in memory is correct. In the case of an error, ECC memory also allows the system to recreate the correct data in real time. ECC uses a form of parity, which is a method of using a single bit of data to detect errors in larger groups of data, such as the typical eight bits of data used to represent values in a computer memory system. ECC memory looks different to non-ECC memory as it has an extra chip that performs the ECC calculations. It has particular value in a business environment as its ability to detect and correct memory errors helps fight data corruption and reduce system crashes and outages of servers and datacentres. ECC memory must be matched otherwise the error correcting capability will be lost. ECC memory is not always registered or buffered, however, all registered memory is ECC memory.
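As a rough illustration of how parity bits can both detect and correct an error, the sketch below implements a toy Hamming(7,4) code, which protects four data bits with three parity bits. This is an assumption chosen for clarity, not necessarily the code used by any particular ECC DIMM, and the function names are hypothetical. Real modules protect much wider data words.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # checks positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # checks positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # checks positions 4, 5, 6, 7
    # Codeword layout by position: 1=p1, 2=p2, 3=d1, 4=p3, 5=d2, 6=d3, 7=d4
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_correct(codeword):
    """Return (data bits, position of the corrected bit, or 0 if none)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3  # 0 means no single-bit error found
    if syndrome:
        c[syndrome - 1] ^= 1  # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], syndrome


stored = hamming74_encode([1, 0, 1, 1])
stored[4] ^= 1  # simulate a single-bit memory error at position 5
fixed, position = hamming74_correct(stored)
print(fixed, position)  # [1, 0, 1, 1] 5
```

The three parity checks together point at the position of the flipped bit, which is why the error can be repaired rather than merely detected.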

Accelerator Cards

These add-in cards fit within a server's PCIe slots and are designed to literally accelerate a process - usually visual or compute related - in order to drive better application performance. Both visual and analytical tasks rely on parallel compute power where many calculations can be carried out simultaneously, and much faster than a CPU can achieve. This is due to acceleration cards having many more processing cores than a CPU will have - think thousands rather than tens.

There are a number of add-in accelerator cards that can be configured within your 3XS server to tackle these intensive tasks and depending on the chassis chosen combinations from two to ten cards are possible.

NVIDIA GPU Accelerators

NVIDIA offer a wide range of datacentre GPUs to suit all budgets, applications and workloads. The processing power and capability of any given card will be determined by the architecture of the card, the number and type of cores and the GPU memory - you can compare individual cards and their suitability for given workloads by reading our NVIDIA DATACENTRE GPU BUYERS GUIDE.

AMD Xilinx Accelerators

The AMD Xilinx Alveo range of accelerator cards delivers compute, networking, and storage acceleration in an efficient small form factor, and is available with 100GbE networking, PCIe 4, and HBM2 memory. Designed to deploy in any server, they offer a flexible solution designed to increase performance for a wide range of datacentre workloads.

Intel FPGA Accelerators

Intel FPGA-based accelerator cards provide hardware programmability on production qualified platforms, so data scientists can design and deploy models quickly, while allowing flexibility in a rapidly changing environment. They come complete with a robust collection of software, firmware, and tools designed to make it easier to develop and deploy FPGA accelerators for workload optimisation in datacentre servers.

Accelerator Cards

If your intended server is for HPC, deep learning or AI workflows then it may be worth reading our dedicated section on CUSTOM AI & HPC SERVERS .

Server Drives

The type and capacity of any internal storage required in a server will very much depend on what the intended use of the server is. There are two types of drives - hard disk drives (HDD) and solid state drives (SSD). The former features spinning disk platters on which the data is stored, whereas the latter has no moving parts and stores data on NAND flash components. Each type has its advantages and disadvantages depending on the cost effectiveness or performance required.

| | HDD | SSD | Comparison |
|---|---|---|---|
| Performance | Hundreds of MB/sec | Thousands of MB/sec | SSDs are much faster |
| Access Times | 5-8ms | 0.1ms | SSDs have almost no latency |
| Reliability | 2-5% failure rate | 0.5% failure rate | SSDs much more reliable |
| Resilience | Susceptible to vibrations | No moving parts | SSD much safer to install in a laptop |
| Energy Use | 6-15W | 2-5W | SSD much more energy efficient |
| Noise | 20-40dB | Silent | No noise from SSD |
| Capacity | Up to 26TB | Up to 15TB | Similar in maximum capacities |
| Cost | £-££ | ££ - ££££ | SSD more expensive, especially at high capacities |

There are also multiple types of format and interface that can be employed - again each more or less suitable for any given usage and workload. The common choices are either SATA, SAS, M.2 or U.2 connections in either 3.5in, 2.5in or M.2 formats. There is also an option to have SSD internal storage in the form of a PCIe add-in card.

| Form Factor | SATA | SAS | U.2 NVMe | M.2 NVMe |
|---|---|---|---|---|
| 3.5in | Yes | Yes | No | No |
| 2.5in | Yes | Yes | Yes | No |
| M.2 | Yes | No | No | Yes |

Within a server it is common that the operating system (OS) will sit on separate drives to the applications and data, so with this in mind an M.2 SSD is ideal. Firstly large capacities are not required for the OS, and secondly it keeps traditional drive bays free for data storage. It is also worth considering a second SSD to provide redundancy and failover for the OS. For data storage it again depends on usage: performance-sensitive data is best stored on SSDs while HDDs are best used for archiving data.

3XS server systems can be configured using datacentre-class drives from leading brands including Micron, Samsung, Seagate and WD.

Data Security

When considering hard disk drive or solid state drive purchases for a PC, workstation, server or NAS, it is vital to understand how best to protect the data on your drives. This can be achieved in a number of ways using RAID technology. RAID stands for redundant array of independent disks, and it essentially spreads the data over multiple drives to remove the chance of a single point of failure.

In the RAID levels that use parity, extra blocks of data, referred to as ‘parity’ blocks, are distributed across the multiple drives so that in the event of failure of any one drive the parity blocks can be used to retrieve the lost data and rebuild the array. RAID levels are categorised by number and their attributes vary with each type.
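The rebuild idea can be sketched with XOR, the operation commonly used for single parity. The block contents and the helper name `xor_blocks` below are made up for illustration, and a real controller works on much larger stripes spread across the drives.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


data_blocks = [b"SCAN", b"3XS!", b"RAID"]  # one block per data drive
parity = xor_blocks(data_blocks)           # held on a further drive

# Simulate losing the second drive, then rebuild it from the survivors.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)  # b'3XS!'
```

XOR-ing the surviving blocks with the parity block cancels out everything except the missing block, which is how the array can be rebuilt onto a replacement drive.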

RAID 0

RAID 0 is the fastest RAID mode since it stripes data across all of the array’s drives and as the capacities of each drive are added together it results in the highest capacity of any RAID type. However, RAID 0 lacks a very important feature - data protection. If one drive fails, all data becomes inaccessible, so while RAID 0 configuration may be ideal for gaming where performance matters but data is not critical, it is not recommended for storing critical data.

RAID 1

RAID 1 works across a maximum of two drives and provides data security since all data is written to both drives in the array. If a single drive fails, data remains available on the other drive, however, due to the time it takes to write data multiple times, performance is reduced. Additionally, RAID 1 reduces disk capacity by 50% since each bit of data is stored on both disks in the array. RAID 1 configurations are most commonly seen when mirroring drives that contain the operating system (OS) in enterprise servers, providing a back-up copy.

RAID 5

RAID 5 writes data across all drives in the array and to a parity block for each data block. If one drive fails, the data from the failed drive can be rebuilt onto a replacement drive. A minimum of three drives is required to create a RAID 5 array, and the capacity of a single drive is lost from useable storage due to the parity blocks. For example, if four 2TB drives were employed in a RAID 5 array, the useable capacity would be 3x 2TB = 6TB. Although some capacity is lost, the performance is almost as good as RAID 0, so RAID 5 is often seen as the sweet spot for many workstation and NAS uses.

RAID 6

RAID 6 writes data across all drives in the array, like RAID 5, but two parity blocks are used for each data block. This means that two drives can fail in the array without loss of data, as it can be rebuilt onto replacement drives. A minimum of four drives is required to create a RAID 6 array, although due to the dual parity block, the capacity of two drives is lost - for example if you had five 2TB drives in an array, the usable capacity would be 3x 2TB = 6TB. Due to this security versus capacity trade-off, RAID 6 would usually only be employed in NAS appliances and servers where data is critical.

RAID 10

RAID 10 is referred to as a nested RAID configuration as it combines the protection of RAID 1 with the performance of RAID 0. Using four drives as an example, RAID 10 creates two RAID 1 arrays, and then combines them into a RAID 0 array. Such configurations offer exceptional data protection, allowing for two drives to fail across two RAID 1 segments. Additionally, due to the RAID 0 stripe, it provides users high performance when managing greater amounts of smaller files, so is often seen in database servers.

RAID 50

RAID 50 is referred to as a nested RAID configuration as it combines the parity protection of RAID 5 with the performance of RAID 0. Due to the speed of RAID 0 striping, RAID 50 improves upon RAID 5 performance, especially during writes, and also offers more protection than a single RAID level. RAID 50 is often employed in larger servers when you need improved fault tolerance, high capacity and fast write speeds. A minimum of six drives is required for a RAID 50 array, although the more drives in the array the longer it will take to initialise and rebuild data due to the large storage capacity.

RAID 60

RAID 60 is referred to as a nested RAID configuration as it combines the double parity protection of RAID 6 with the performance of RAID 0. Due to the speed of RAID 0 striping, RAID 60 improves upon RAID 6 performance, especially during writes, and also offers more protection than a single RAID level. RAID 60 is often employed in larger server deployments when you need exceptional fault tolerance, high capacity and fast write speeds. A minimum of eight drives is required for a RAID 60 array, although the more drives in the array the longer it will take to initialise and rebuild data due to the large storage capacity.
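The capacity rules described for each level can be collected into one small calculator. This is a simplified sketch assuming identical drives. The function name, the `groups` parameter (the number of RAID 5 or RAID 6 sub-arrays inside a RAID 50 or RAID 60 set) and the two-drive minimum for RAID 0 are assumptions made for illustration.

```python
MIN_DRIVES = {"0": 2, "1": 2, "5": 3, "6": 4, "10": 4, "50": 6, "60": 8}


def usable_capacity_tb(level, drives, drive_tb, groups=2):
    """Estimate usable capacity in TB for an array of identical drives."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    if level == "0":
        return drives * drive_tb         # striping only, no protection
    if level == "1":
        return drive_tb                  # mirror: one drive's worth of space
    if level == "5":
        return (drives - 1) * drive_tb   # one drive's capacity goes to parity
    if level == "6":
        return (drives - 2) * drive_tb   # two drives' capacity go to parity
    if level == "10":
        return (drives // 2) * drive_tb  # assumes an even number of drives
    parity_per_group = 1 if level == "50" else 2
    return (drives - groups * parity_per_group) * drive_tb


print(usable_capacity_tb("5", 4, 2))  # 6, matching the RAID 5 example
print(usable_capacity_tb("6", 5, 2))  # 6, matching the RAID 6 example
```

The sketch only covers capacity. Rebuild time and performance also vary between levels, as described for each level above.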

Systems that support RAID arrays will usually have a hot-swap capability, meaning that a failed drive can be removed from the array without powering the system down. A new drive is put in the failed drive's place and the array rebuild begins automatically. You can also configure a hot spare drive - an empty drive that sits in the array doing nothing until a drive fails, meaning that the rebuild can start without the failed drive being removed first.

It is also worth mentioning that multiple RAID arrays can be configured in a single system - it may be that RAID 1 is employed to protect a pair of SSDs for the OS, whereas multiple drives are protected by RAID 6 including hot spare drives too. Ultimately however, the RAID configuration(s) you choose need to be controlled, either by software on the system or additional hardware within it. Let’s take a look at the options.

Hardware RAID

In a hardware RAID setup, the drives connect to a RAID controller card inserted in a PCIe slot or integrated into the motherboard. This works the same for larger servers as well as workstations and desktop computers, and many external drive enclosures have a RAID controller built in. High-end hardware RAID controllers can be upgraded with a cache protector. This comprises a small capacitor which, in the event of power loss, keeps powering the cache memory on the RAID controller for as long as three years. Without a cache protector, data stored in the RAID controller's cache will be lost and could cause data corruption.

| Advantages | Disadvantages |
|---|---|
| • Better performance, especially in more complex RAID configurations. Processing is handled by the dedicated RAID processor rather than the CPUs, which results in less strain on the system when writing backups and less downtime when restoring data • Has more RAID configuration options, including hybrid configurations which may not be available with certain OSes • Compatible across different OSes. This is critical if you plan to access your RAID system from, say, Mac and Windows. Hardware RAID would be recognisable by any system | • Since there’s more hardware, there’s more cost involved in the initial setup • Inconsistent performance for certain hardware RAID setups when using SSDs • Older RAID controllers disable the built-in fast caching functionality of SSDs that is needed for efficient programming and erasing onto the drive |

Chipset RAID

Many AMD and Intel motherboard chipsets support some of the basic types of RAID, potentially negating the need for a hardware RAID controller.

| Advantages | Disadvantages |
|---|---|
| • No additional cost - all you need to do is connect the drives and then configure them in the BIOS • Modern CPUs are so powerful they can easily handle RAID 0 & 1 with no noticeable performance hit | • You’re restricted to the RAID levels your motherboard chipset supports • Performance hit if you’re using more complex RAID configurations • Limited performance and resilience compared to hardware RAID controller • If the motherboard dies you lose access to the RAID array |

Software RAID

The third and final type of RAID array is called software RAID and is when you use the operating system to create a RAID. Numerous operating systems support RAID, including Windows and Linux.

| Advantages | Disadvantages |
|---|---|
| • No additional cost - all you need to do is connect the drives and then configure them in the OS • Modern CPUs are so powerful they can easily handle RAID 0 & 1 with no noticeable performance hit | • Software RAID is often specific to the OS being used, so it can’t generally be used for drive arrays that are shared between operating systems • You’re restricted to the RAID levels your specific OS can support • Performance hit if you’re using more complex RAID configurations • If the OS dies you lose access to the RAID array |

Server Network Cards

A network interface card (NIC) is an add-in card for a server that enables it to connect to the wider network and outside world. Although most servers include a basic network card, connectivity and performance are linked, so changing or upgrading NICs is common practice.

If you are making network connections to a corporate network, then Ethernet is normally adequate, but you may want more ports on the NICs or more NICs - both are ways of adding redundancy and resilience into your server and network, as you are making multiple connections in case one should fail. If you are dealing with demanding tasks such as HPC or AI then InfiniBand is the optimum standard rather than Ethernet. Similarly, a Fibre Channel connection is often used when dealing with high-end storage, so in both these cases you will need to configure NICs to suit your needs. The sections below look at each technology in brief, but you can learn more about specific cards by reading our dedicated NETWORK CARD BUYERS GUIDE.

Ethernet

Ethernet is the most common form of communication seen in a network and has been around since the early 1980s. Over this time the speeds of available Ethernet connections have vastly increased. The initial commonly available NICs were capable of 10 megabits per second (10Mbps), followed by 100Mbps and Gigabit Ethernet (1GbE or 1000Mbps). In a corporate network, 1GbE has long been the standard, with faster 10GbE, 25GbE, 40GbE and 50GbE speeds also being available. The last few years have seen speeds of Ethernet increase to 100GbE, 200GbE and recently 400GbE.

InfiniBand

InfiniBand is an alternative technology to Ethernet. Developed in the late 1990s, it is usually used in HPC and AI clusters where high bandwidth and low latency are key requirements. Although an InfiniBand NIC fits in a server the same way and works in a similar way to an Ethernet NIC to transfer data, InfiniBand NICs have historically achieved improved throughput by not needing to use the server CPU to control data transmission, hence latency is reduced by removing this step. Like Ethernet there have been several generations of InfiniBand, starting with SDR (Single Data Rate) providing 2.5Gbps throughput per lane. This has since been superseded by DDR - 5Gbps, QDR - 10Gbps, FDR - 14Gbps, EDR - 25Gbps, HDR - 50Gbps, NDR - 100Gbps, XDR - 200Gbps and GDR - 400Gbps.

Fibre Channel

Fibre Channel (FC) is another high-speed networking technology primarily used for transmitting data among datacentres, computer servers, switches and storage at data rates of up to 128Gbps. Fibre Channel was seen as the leading technology for a Storage Area Network (SAN) and, as it differed entirely from Ethernet, servers would need an Ethernet NIC to communicate with the wider network and an FC NIC (or Host Bus Adapter - HBA) to communicate with the SAN. More recently, an alternative form of FC called Fibre Channel over Ethernet (FCoE) was developed to lower the cost of FC solutions by eliminating the need to purchase separate HBA hardware. A Fibre Channel HBA would have similar fibre optical interfaces to those now seen on the highest speeds of Ethernet and InfiniBand, just without the need for an SFP module.

Server Operating Systems

Servers will usually run on either a Windows Server or Linux operating system, depending on whether the server is aimed towards more regular processing and storage needs or a high-end compute use like data science calculations.

Microsoft Windows Server 2022

Windows Server 2022 is the latest operating system (OS) for servers from Microsoft. To cover small office servers right up to datacentre deployments, it is available in three versions - Windows Server 2022 Essentials, Windows Server 2022 Standard and Windows Server 2022 Datacenter.

With support for up to 25 users and 50 devices, the Essentials edition is the perfect server OS for small businesses. It includes many of the features of the larger editions, such as Windows Admin Center and System Insights. Rather than a license-based model, Essentials is just a single purchase.

The Standard edition is aimed at users who only need a few Windows Server virtual machines (VMs). In addition to installation on the physical hardware, two virtual machines running Windows Server are also possible with the server license. There is no limit on Linux VMs. Nearly all features and server roles from the Datacenter edition are also included in the Standard edition. One exception is Storage Spaces Direct.

Finally, with the Datacenter edition, users have the greatest possible flexibility in server deployment and can handle large, rapidly changing workloads. The number of VMs is unlimited and software-defined storage can be implemented using Storage Spaces Direct.

| Windows 2022 Edition | Ideal for | Licensing Model | CAL Requirements | Cost |

|---|---|---|---|---|

| ESSENTIALS | Small businesses with up to 25 users and 50 devices | No License required | No CAL required | £ |

| STANDARD | Physical or minimally virtualised environments | Per CPU core | Windows Server CAL | ££ |

| DATACENTRE | Highly virtualised datacentres and cloud environments | Per CPU core | Windows Server CAL | £££ |

To learn more about the feature sets of the three versions and the licensing requirements for CPU cores and users or devices, please read our dedicated Windows Server 2022 buyers guide.

Linux

Alternatively if your server is intended to be used for deep learning and AI or HPC workloads then it is advisable to use Linux. There are many distributions of Linux available, and our 3XS Systems team can advise which is best for your given use.

Professional Services

Although all our 3XS servers come as standard with a comprehensive 3-year (1st year onsite) warranty, this can be extended to provide extra cover if required. Additional services can also be provided such as installation, remote monitoring capabilities or data science consultancy.

Installation

Scan specialists will aid with the installation of any new infrastructure, from simple single device installations all the way through to installing complete racks of equipment.

Data Scientist

Scan will help effect change by building up a client’s analytical skills, competencies and understanding of the intricacies of their business, through the analysis and interpretation of complex digital data.

Remote Monitoring

Complex environments bring with them unpredictability and vulnerability, clear reasons to manage and monitor them efficiently. Scan will have visibility over your data centre/network’s functionality and operations and take a proactive approach to stay ahead of the outages, not just tackle them as they occur.

To learn more about our full service offering for your servers and wider infrastructure, please visit our professional services section .

External Server Storage

As previously mentioned, it is not uncommon for storage capacity to expand outside the confines of the server chassis. This may be in addition to the internal storage the server has to offer, or if significant fast flash storage is required, then a network storage system may be the best approach. 3XS servers can be configured as dedicated storage devices or to complement a wide variety of storage systems from a number of leading brands.

NAS

Network Attached Storage (NAS) systems are the simplest way to add extra storage capacity. Usually connected over Ethernet, we offer various NAS options from both QNAP and Synology in 4- to 16-drive bay versions. You can learn more about NAS solutions in our dedicated NAS BUYERS GUIDE .

Tiered Storage

Tiered storage is designed to act as fast access, regular storage and archive in a single solution. Different drive types are used to achieve the parameters needed - NVMe SSDs for hot data, SATA SSDs for the bulk storage, and cost-effective HDDs for the archive capacity. These tiered devices are available from our partners Dell-EMC, NetApp and DDN, where inbuilt software then fluidly moves data between the tiers to maximise performance and access.
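A tiering policy of this kind can be pictured as a simple rule based on how recently data was accessed. The sketch below is purely illustrative: the thresholds and the function name `choose_tier` are hypothetical, and the software supplied with a real tiered storage system applies its own, more sophisticated policies.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds chosen for illustration only.
HOT_WINDOW = timedelta(days=7)
BULK_WINDOW = timedelta(days=90)


def choose_tier(last_access: datetime, now: datetime) -> str:
    """Pick a storage tier from the time since the data was last accessed."""
    age = now - last_access
    if age <= HOT_WINDOW:
        return "NVMe SSD"  # hot data
    if age <= BULK_WINDOW:
        return "SATA SSD"  # bulk storage
    return "HDD"           # archive


now = datetime(2024, 1, 31)
for last_access in (datetime(2024, 1, 30), datetime(2023, 12, 15), datetime(2023, 6, 1)):
    print(last_access.date(), "->", choose_tier(last_access, now))
```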

All Flash Storage

All flash storage arrays solely use SSDs - either SATA or NVMe - to ensure rapid data transfer to the connected servers. We supply solutions from Dell-EMC, NetApp and DDN in various capacities which can be configured with either Ethernet or InfiniBand network connections. They also offer rich software feature sets including de-duplication, snapshot technology and cloud integration.

AI Optimised Storage

Storage optimised for AI is in many ways similar to the all-flash arrays, however the main difference is that only NVMe SSDs are employed for maximum data throughput, ensuring GPUs in connected servers are utilised to the full extent. These solutions operate specific PEAK:AIO software that is dedicated to delivering maximum data throughput and GPU utilisation. Solutions from 3XS and Dell are available in various capacities and feature low latency InfiniBand connectivity.

Server Networking and Infrastructure

As mentioned earlier, the server or servers act as the core of the network in any organisation, and everything else needs to access them via their installed NICs. How a server connects to a switch and other elements in the network is therefore key to the overall performance of the entire infrastructure.

Switches

Network switches are devices that connect multiple PCs, workstations, servers or storage devices to enable them to communicate within an organisation and share and access the Internet connection to the wider world. Switches may also be used to connect other network capable or IP (Internet Protocol) devices such as wireless access points, surveillance cameras, phones and video conferencing systems. As you may expect, switches are available in the same networking standards as server NICs and storage appliances - Ethernet and InfiniBand - and with various throughput capabilities and feature sets. It is essential to get the ones that best support your hardware devices across the network. You can learn more about choice of switches in our dedicated NETWORK SWITCHES BUYER GUIDE.

UPS

An Uninterruptible Power Supply (UPS) is a device that sits between the power source and the servers. Its job is to ensure they receive a consistent and clean power supply, whilst also protecting them from power surges and power failures. A surge could damage components within the server and a failure could interrupt data being saved on the device, resulting in errors. The battery runtime of most UPSs is relatively short - 5 to 15 minutes being typical - but sufficient to allow time to bring an auxiliary power source online, or to properly shut down your servers and other devices. Much like servers they are available in either tower or rackmount formats - to learn more about correctly sizing a UPS, extended runtime options and connections to your servers please read our dedicated UPS BUYER GUIDE.
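For a first feel of how load affects runtime, a very rough estimate divides the usable battery energy by the connected load. The sketch below assumes a hypothetical battery rating and inverter efficiency and ignores battery ageing and the fact that real batteries deliver less energy at high discharge rates, so manufacturer runtime charts should always take precedence.

```python
def estimated_runtime_minutes(battery_wh, load_watts, inverter_efficiency=0.9):
    """Rough UPS runtime estimate in minutes (illustrative only)."""
    if load_watts <= 0:
        raise ValueError("load_watts must be positive")
    usable_wh = battery_wh * inverter_efficiency  # energy left after losses
    return usable_wh / load_watts * 60


# Hypothetical example: a 200Wh battery feeding servers drawing 1000W or 2000W.
for load in (1000, 2000):
    print(f"{load}W load: about {estimated_runtime_minutes(200, load):.1f} minutes")
```

Doubling the load halves the estimate, which is why adding servers to an existing UPS can shorten the time available for a clean shutdown.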

Rack Cabinets

Even in a smaller office a rack cabinet provides a secure environment for your servers, storage, switches and UPS. They also work to keep cabling simple and clear and stop unauthorised users from accessing the business infrastructure. Cabinets can be configured to a range of heights - typically 14U - 47U, and with a variety of sides, shelves and mounts for power distribution units (PDUs).
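When choosing a cabinet height it helps to add up the rack units (U) of everything that will be installed and leave some room to grow. The equipment list and helper names below are hypothetical examples, not a recommended configuration.

```python
def rack_units_needed(equipment: dict[str, int]) -> int:
    """Total rack units occupied by the listed equipment."""
    return sum(equipment.values())


def fits_in_cabinet(equipment: dict[str, int], cabinet_u: int, spare_u: int = 0) -> bool:
    """Check the equipment fits while keeping `spare_u` units free for growth."""
    return rack_units_needed(equipment) + spare_u <= cabinet_u


# Hypothetical equipment list: name -> height in rack units.
planned = {
    "three 2U rack servers": 6,
    "4U storage system": 4,
    "1U switch": 1,
    "2U UPS": 2,
}

print(rack_units_needed(planned))               # 13
print(fits_in_cabinet(planned, 14))             # True
print(fits_in_cabinet(planned, 14, spare_u=4))  # False
print(fits_in_cabinet(planned, 24, spare_u=4))  # True
```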

A PDU, or ePDU if network connected, provides power to each connected device within a rack. PDUs fit vertically down the side of a rack cabinet and provide power connectivity from the UPS. They not only help keep power cabling neat and manageable, they can also offer monitoring capability and even be metered if billing is being conducted within a hosted datacentre environment.

Ready to buy?

We hope you’ve found our guide to custom built 3XS servers useful, giving you the additional knowledge to get the most out of our intuitive server configurators. Click below to see our great ranges of servers designed for many popular workloads.

If you would still like some advice on configuring your ideal server, don’t hesitate to contact our friendly advisors on 01204 474747 or by contacting [email protected] .